Ilya Sutskever: The brain behind ChatGPT

ChatGPT may be stealing the spotlight, but let's not forget about the silent genius behind it.

Meet Ilya Sutskever, the mind behind the machine.

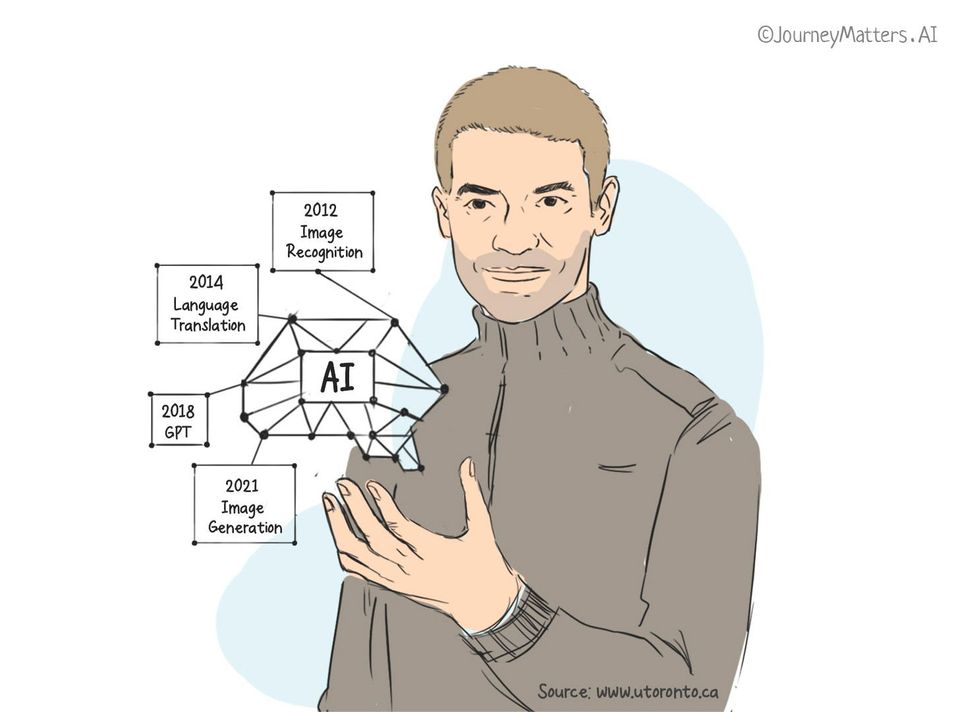

He is a pioneer in artificial intelligence who has played a vital role in shaping today's AI landscape, and he continues to push the boundaries of what is possible with machine learning.

Summary

Sutskever is the co-founder and chief scientist of OpenAI, a research organization dedicated to advancing AI in a responsible and safe manner. Under his scientific leadership, OpenAI has built the GPT family of language models, the DALL-E image generators, and ChatGPT.

In this article, we will explore his journey from a young researcher to one of the leading figures in the field of artificial intelligence. Whether you are an AI enthusiast, a researcher, or simply someone who is curious about the inner workings of this field, this article is sure to provide you with valuable insights and information.

The article is organized around the following timeline:

2003: First impression of Ilya Sutskever

2011: Idea of AGI

2012: Revolution in image recognition

2013: Auction of DNNresearch to Google

2014: Revolution in language translation

2015: From Google to OpenAI: A new chapter in AI

2018: Development of GPT 1, 2 & 3

2021: Development of DALL-E 1

2022: Unveiled ChatGPT to the world

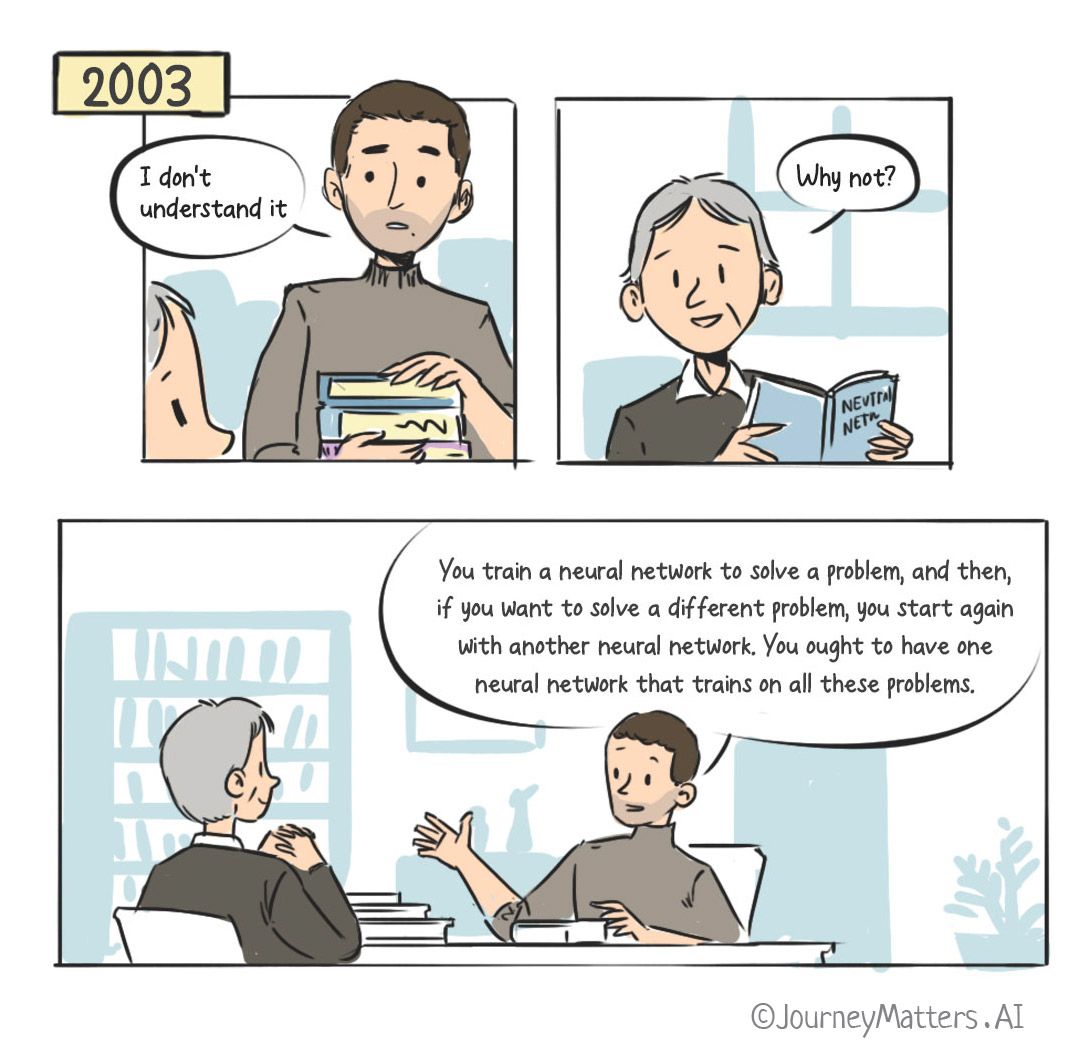

2003: First impression of Ilya Sutskever

Sutskever wanted to join Professor Geoffrey Hinton's deep learning lab when he was an undergraduate at the University of Toronto. One day, he knocked on Professor Hinton's office door and asked if he could join the lab. The professor asked him to make an appointment. Not wanting to wait, Sutskever immediately asked, "How about now?"

Hinton found Sutskever to be a sharp student and gave him two papers to read.

Sutskever returned a week later and told the professor that he didn't understand them. When the professor asked, "Why not?" he explained:

“You train a neural network to solve a problem, and then, if you want to solve a different problem, you start again with another neural network, and you train it to solve a different problem. You ought to have one neural network that trains on all these problems.”

Recognizing Sutskever's unusual ability to reach conclusions that took even experienced researchers years to find, Hinton invited him to join his lab.

2011: Idea of AGI

While Sutskever was still at the University of Toronto, he flew to London to interview for a job at DeepMind. There he met Demis Hassabis and Shane Legg, the co-founders of DeepMind, who were trying to build AGI (artificial general intelligence). AGI is a type of AI that can think and reason like a human and perform the wide variety of tasks we associate with human intelligence, such as understanding natural language, learning from experience, making decisions, and solving problems.

At the time, AGI was not something serious researchers talked about. Sutskever thought they had lost touch with reality, so he turned down the job and returned to the university, eventually joining Google in 2013.

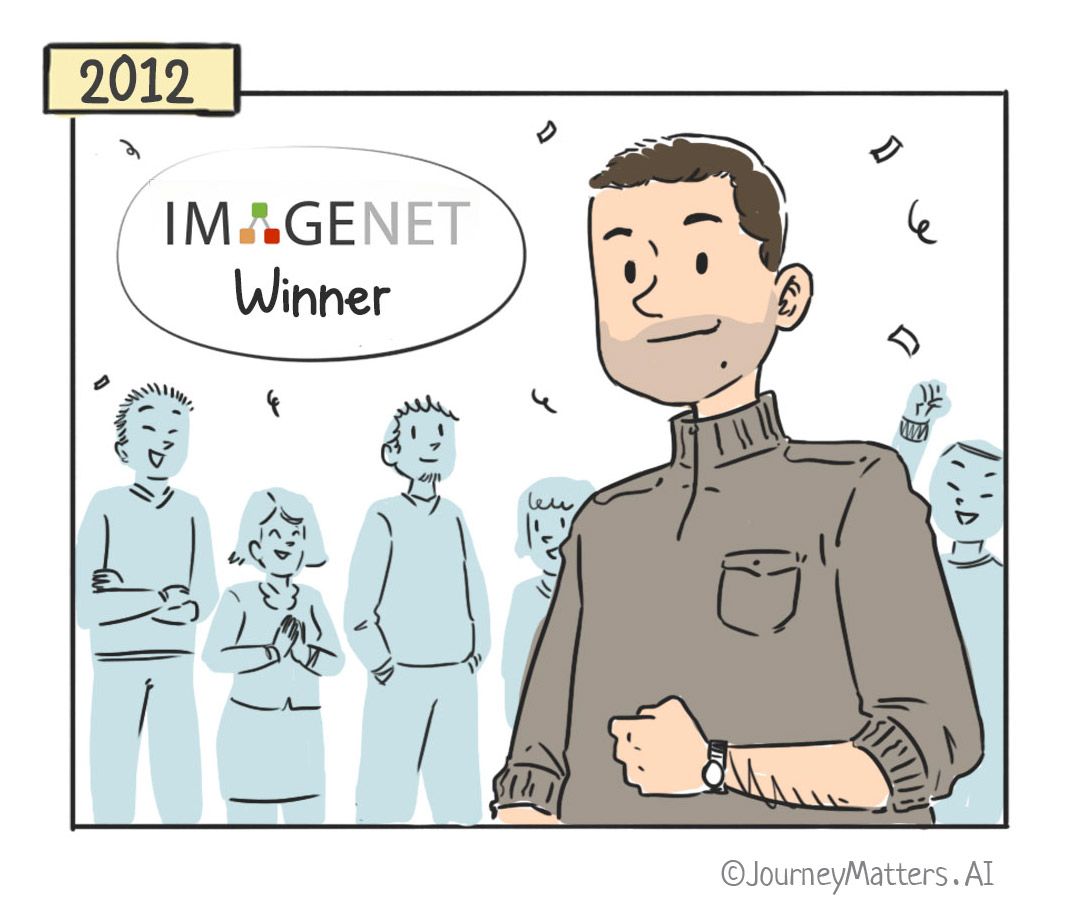

2012: Revolution in image recognition

Geoffrey Hinton believed in deep learning when few others did. He was convinced that success in the ImageNet competition would settle the argument once and for all.

Every year, a Stanford lab hosted the ImageNet contest, which was built around a massive database of meticulously labeled photos. Researchers from all over the world competed to build the system that could identify the objects in those photos most accurately.

Hinton had two of his students, Ilya Sutskever and Alex Krizhevsky, build an entry for the competition.

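They won the 2012 ImageNet competition by a wide margin: their deep convolutional neural network, trained on two GPUs, achieved a top-5 error rate of 15.3%, compared with 26.2% for the runner-up. The system later became known as AlexNet.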
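ImageNet entries were ranked mainly by top-5 error: an image counts as correctly classified if its true label appears anywhere among the system's five highest-scoring guesses. The short sketch below computes that metric; the function name `top5_error` and the toy scores are illustrative assumptions, not code from the competition or from AlexNet.

```python
from __future__ import annotations


def top5_error(scores: list[list[float]], labels: list[int]) -> float:
    """Fraction of images whose true label is not among the five highest-scoring classes."""
    if len(scores) != len(labels) or not labels:
        raise ValueError("need one score list per label, and at least one image")
    misses = 0
    for image_scores, true_label in zip(scores, labels):
        # Class indices sorted by score, highest first; keep the best five guesses.
        ranked = sorted(range(len(image_scores)), key=lambda c: image_scores[c], reverse=True)
        if true_label not in ranked[:5]:
            misses += 1
    return misses / len(labels)


# Toy example with 7 hypothetical classes and 2 images.
scores = [
    [0.9, 0.8, 0.7, 0.6, 0.5, 0.1, 0.0],  # image 1: true class 4 is ranked 5th, so it counts as correct
    [0.9, 0.8, 0.7, 0.6, 0.5, 0.1, 0.0],  # image 2: true class 6 is ranked last, so it is a miss
]
labels = [4, 6]
print(top5_error(scores, labels))  # 0.5
```

Scoring the top five guesses rather than only the single best one reflects the fact that many ImageNet photos contain several plausible objects.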

Image recognition has never been the same since then.

Later, Sutskever, Krizhevsky, and Hinton published a paper about AlexNet, which became one of the most highly cited papers in the field of computer science, with over 60,000 citations from other researchers.

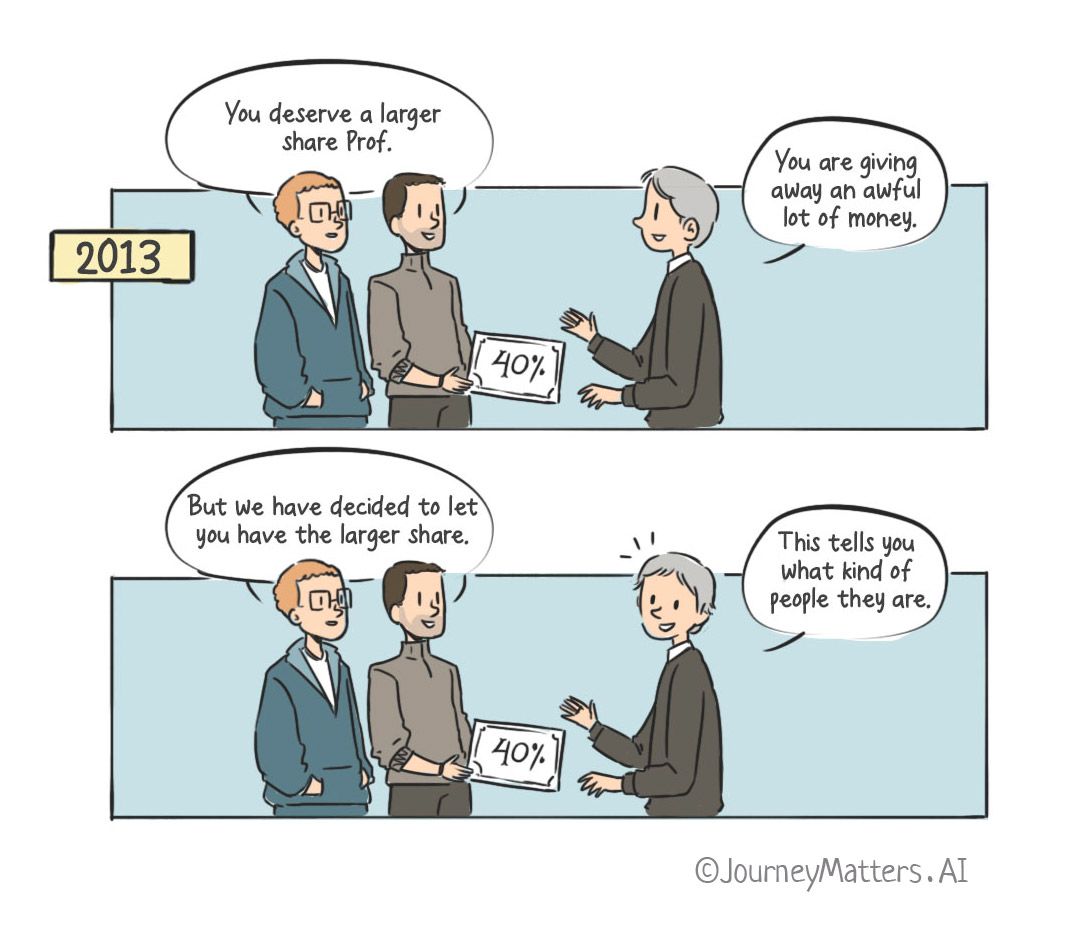

2013: Auction of DNNresearch to Google

Hinton, along with Sutskever and Krizhevsky, formed a new company named DNNresearch. They did not have any products and had no plans to make any in the future.

Hinton asked a lawyer how to make his new company worth the most money even though it only had three employees, no products, and no history. One of the options that the lawyer gave him was to set up an auction.

Four companies made bids for the acquisition: Baidu, Google, Microsoft, and a young London-based startup named DeepMind. The first to drop out was DeepMind, followed by Microsoft, leaving Baidu and Google to compete.

One evening, close to midnight, with the price at $44 million, Hinton suspended the bidding and went to get some sleep. The next day, he announced that the auction was over and sold his company to Google for $44 million. Rather than keep pushing the price higher, he believed that finding the right home for his research was more important. Both Hinton and his students prioritized their ideas over financial gain.

Hinton proposed splitting the proceeds equally, but Sutskever and Krizhevsky insisted that he deserved a larger share (40%). Hinton suggested they sleep on it; the next day, they still felt the same. Hinton later commented that "it tells you what kind of people they are, not what kind of person I am."

After the acquisition, Google hired Sutskever as a research scientist at Google Brain, where his ambitions grew and his views moved closer to those of DeepMind's founders. He began to believe that AGI was just around the corner. Sutskever was not afraid to change his mind when faced with new information or experiences.

Believing in AGI required a leap of faith. As Sergey Levine, who worked with Sutskever at Google, put it: "He is somebody who is not afraid to believe."

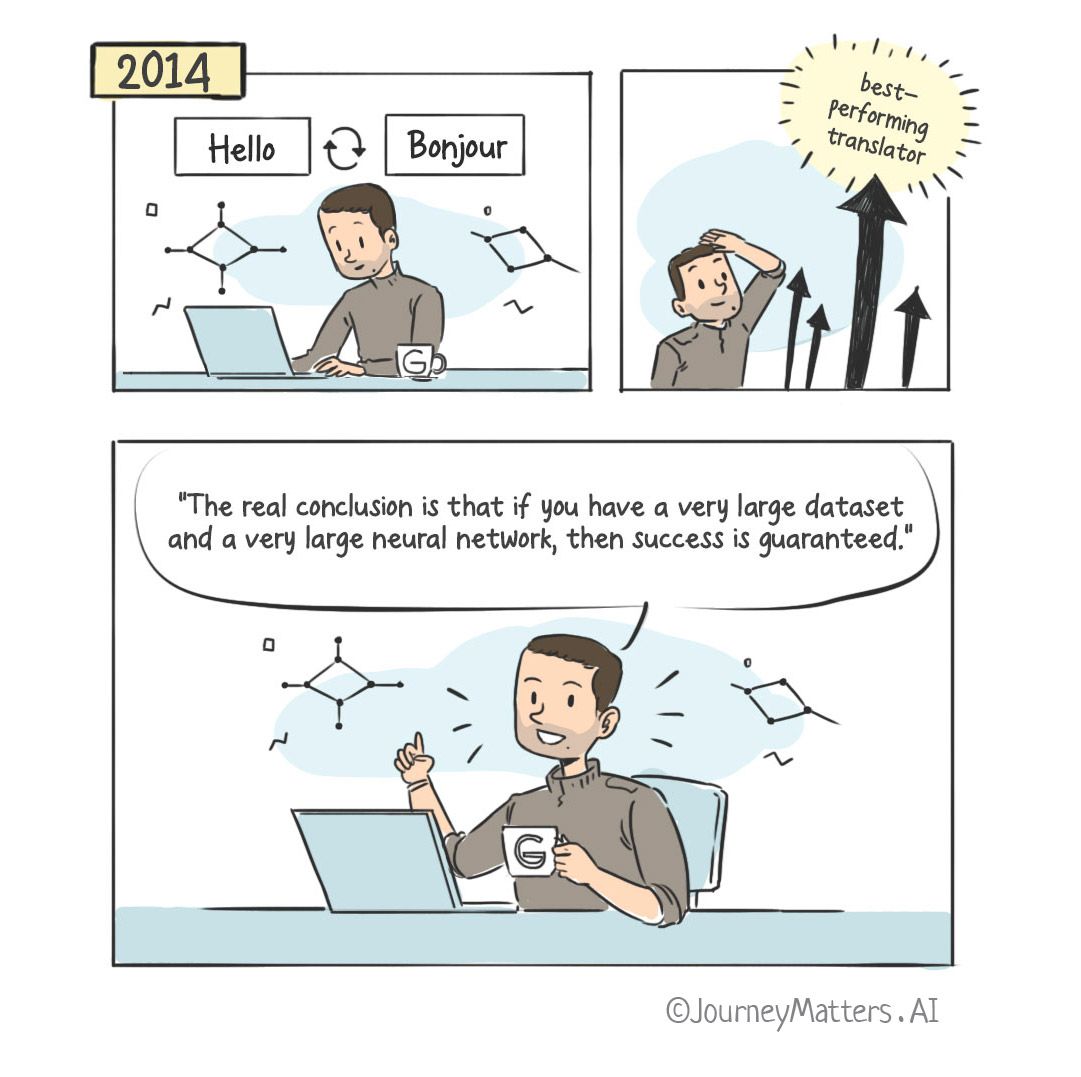

2014: Revolution in language translation

At Google Brain, Sutskever, together with Oriol Vinyals and Quoc Le, developed Sequence to Sequence Learning[1], a neural network approach they first applied to translating English into French. One LSTM network (the encoder) reads the input sequence, such as an English sentence, and compresses it into a fixed-size vector; a second LSTM network (the decoder) then generates the output sequence, such as the French sentence, one word at a time from that vector.
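The Sequence to Sequence paper[1] states this idea compactly. Write the input sentence as words $x_1, \ldots, x_T$, the output sentence as $y_1, \ldots, y_{T'}$ (the two lengths may differ), and let $v$ be the fixed-size vector into which the encoder LSTM compresses the input. The decoder then factorizes the probability of a translation into one next-word prediction per output word:

$$
p(y_1, \ldots, y_{T'} \mid x_1, \ldots, x_T) = \prod_{t=1}^{T'} p(y_t \mid v, y_1, \ldots, y_{t-1})
$$

Each factor is a probability distribution over the output vocabulary computed by the decoder LSTM. In the paper, every output sentence ends with a special end-of-sentence symbol, which lets the model decide for itself when a translation is finished.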

He said[2] that researchers didn’t believe that neural networks could do translation, so it was a big surprise when they could.

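On the WMT'14 English-to-French benchmark, the approach outperformed a strong phrase-based statistical translation system (34.8 vs. 33.3 BLEU), and the encoder-decoder idea later underpinned Google Translate's switch to neural machine translation in 2016. Language translation has never been the same.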

2015: From Google to OpenAI: A new chapter in AI

Sam Altman and Greg Brockman brought together Sutskever and nine other researchers to see whether it was still possible to build a research lab with the best minds in the field.

As they discussed the lab that would become OpenAI, Sutskever felt he had found a group of like-minded people who shared his beliefs and aspirations.

Brockman invited all ten researchers to join the lab and gave them three weeks to decide. When Google learned of this, it offered Sutskever a substantial sum to stay. When he declined, Google raised its offer to nearly $2 million for the first year, two or three times what OpenAI was going to pay him.

Sutskever nonetheless gladly turned down Google's multimillion-dollar offer and became a co-founder of the non-profit OpenAI.

The goal of OpenAI was to use AI to benefit all of humanity and advance AI in a responsible way.

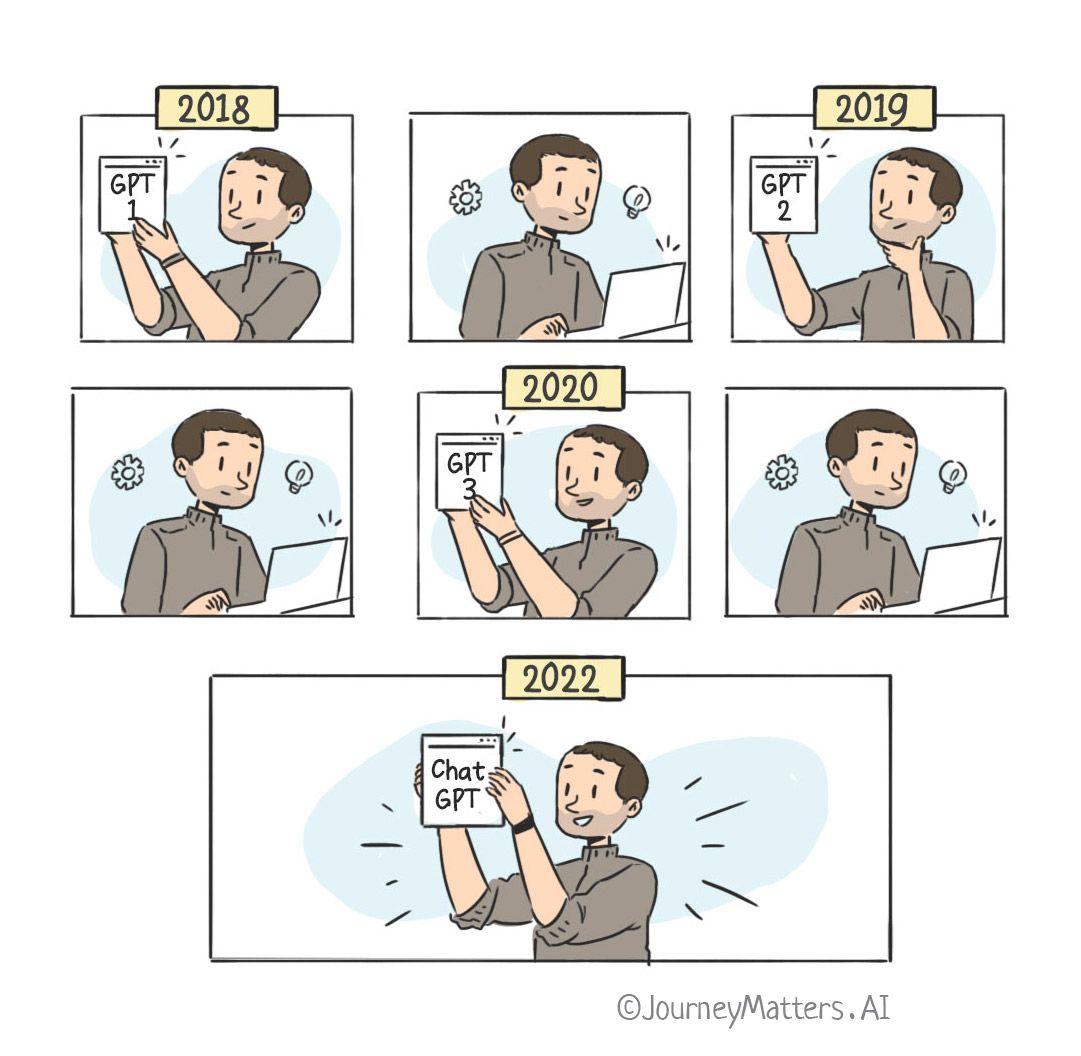

2018: Development of GPT 1, 2 & 3

Sutskever led OpenAI's development of GPT-1, which was followed by GPT-2, GPT-3, and ultimately ChatGPT.

- GPT-1 (2018): The first model in the series was trained on BooksCorpus, a dataset of about 7,000 unpublished books. Its key innovation was generative pre-training on unlabeled text, in which the model learns to predict each word from the words that come before it, followed by fine-tuning on specific tasks with labeled data. This allows the model to learn the structure of language and generate human-like text.

- GPT-2 (2019): Building on GPT-1, it scaled up to 1.5 billion parameters and was trained on WebText, a much larger dataset of about 8 million web pages. GPT-2 could generate coherent, fluent paragraphs on a wide range of topics, and it could attempt tasks such as summarization and translation without any task-specific training.

- GPT-3 (2020): A substantial leap in both size and performance. With 175 billion parameters, more than 100 times as many as GPT-2, it could perform many language tasks, such as question answering, translation, and summarization, from just a few examples given in the prompt, in some cases matching systems fine-tuned specifically for those tasks. It could also perform simple coding tasks, write coherent news articles, and even generate poetry.
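The pre-training objective shared by these models, predicting each word from all the words before it, can be sketched in a few lines. This is a minimal illustration, not OpenAI's code: it assumes whitespace tokenization (real GPT models use subword tokens), and the helpers `next_word_pairs` and `language_modeling_loss` plus the toy `uniform` model are hypothetical names introduced here.

```python
from __future__ import annotations

import math
from typing import Callable, Sequence


def next_word_pairs(tokens: Sequence[str]) -> list[tuple[tuple[str, ...], str]]:
    """Turn one tokenized text into (preceding words, next word) training examples."""
    return [(tuple(tokens[:i]), tokens[i]) for i in range(1, len(tokens))]


def language_modeling_loss(
    tokens: Sequence[str],
    prob_next: Callable[[tuple[str, ...], str], float],
) -> float:
    """Average negative log-probability the model assigns to each word given all earlier words."""
    pairs = next_word_pairs(tokens)
    if not pairs:
        raise ValueError("need at least two tokens")
    return -sum(math.log(prob_next(context, word)) for context, word in pairs) / len(pairs)


sentence = "the cat sat on the mat".split()
for context, target in next_word_pairs(sentence):
    print(" ".join(context), "->", target)

# A hypothetical "model" that knows nothing: uniform over the 5 distinct words.
vocab = sorted(set(sentence))


def uniform(context: tuple[str, ...], word: str) -> float:
    return 1 / len(vocab)


print(round(language_modeling_loss(sentence, uniform), 4))  # 1.6094, i.e. ln(5)
```

Training lowers this loss by making the model assign higher probability to the word that actually comes next; a model that has learned nothing, like `uniform`, scores ln(5) here, and any useful model must do better.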

GPT-4 is expected sometime in 2023.

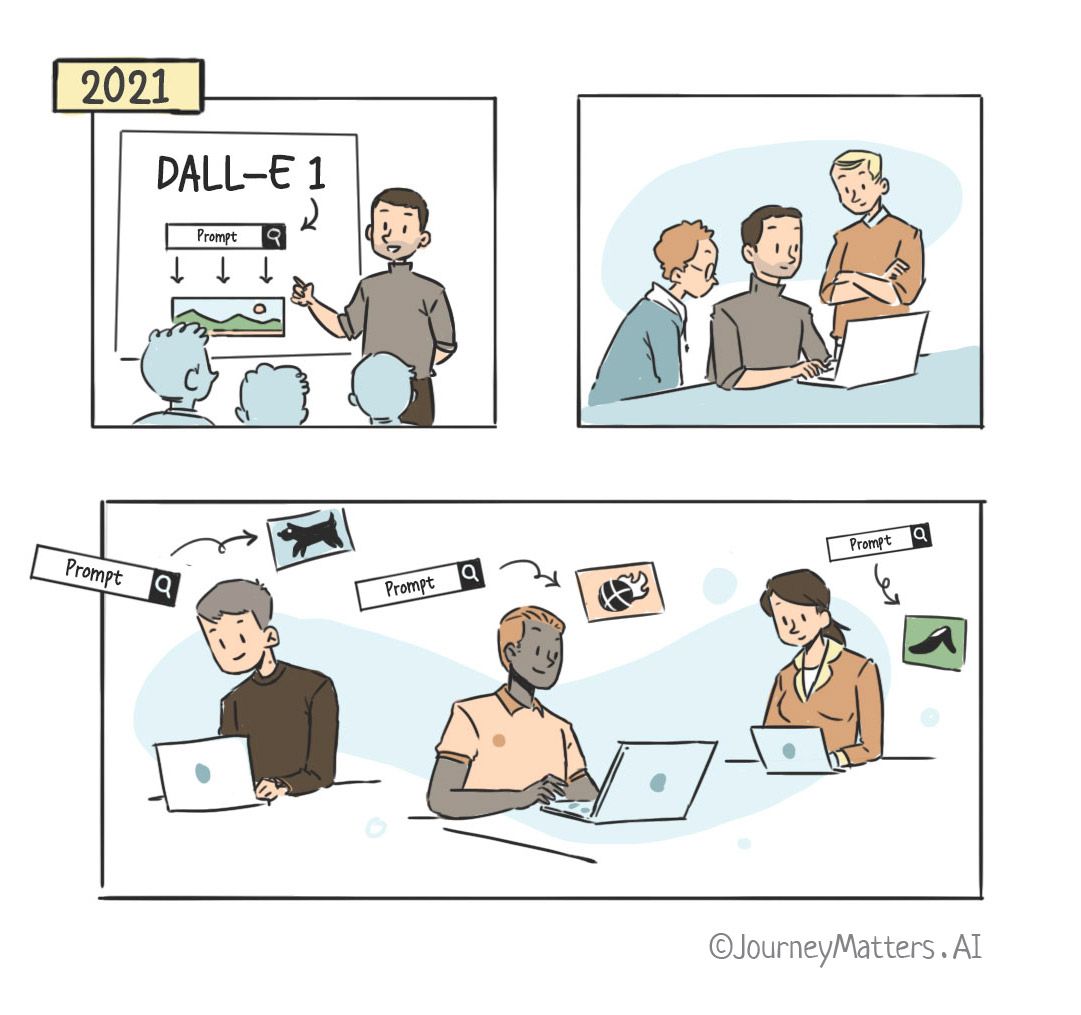

2021: Development of DALL-E 1

Sutskever led OpenAI's invention of DALL-E 1, a model that generates images from text descriptions. It uses the same kind of transformer architecture and next-token training objective as the GPT models, but it predicts a single sequence of text tokens followed by image tokens, so it can generate images instead of only text.
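The following sketch shows how a caption and an image can be packed into one token stream for GPT-style next-token training. It assumes the image has already been converted into a grid of discrete codebook ids by a separate image tokenizer, which is not shown. The vocabulary sizes and caption length are illustrative constants modeled on figures reported for DALL-E 1, and the padding id and the helper names `build_sequence` and `next_token_targets` are hypothetical.

```python
from __future__ import annotations

# Illustrative constants modeled on the figures reported for DALL-E 1.
TEXT_VOCAB = 16384        # text (BPE) token ids: 0 .. 16383
IMAGE_VOCAB = 8192        # image-token codebook ids: 0 .. 8191
MAX_TEXT_TOKENS = 256     # caption is truncated or padded to this length
PAD = TEXT_VOCAB + IMAGE_VOCAB  # hypothetical dedicated padding id, outside both ranges


def build_sequence(text_ids: list[int], image_ids: list[int]) -> list[int]:
    """Concatenate caption tokens and image tokens into one stream for a GPT-style model."""
    if any(not 0 <= t < TEXT_VOCAB for t in text_ids):
        raise ValueError("text id out of range")
    if any(not 0 <= i < IMAGE_VOCAB for i in image_ids):
        raise ValueError("image id out of range")
    text = text_ids[:MAX_TEXT_TOKENS]
    text = text + [PAD] * (MAX_TEXT_TOKENS - len(text))
    # Shift image ids past the text vocabulary so the two kinds of token never collide.
    image = [TEXT_VOCAB + i for i in image_ids]
    return text + image


def next_token_targets(sequence: list[int]) -> tuple[list[int], list[int]]:
    """Inputs and targets for next-token training: the target at position t is token t + 1."""
    return sequence[:-1], sequence[1:]


# Toy example: a 2-token caption and a 4-token "image" (a real 32x32 grid would have 1024).
seq = build_sequence([17, 42], [0, 1, 2, 8191])
inputs, targets = next_token_targets(seq)
print(len(seq))     # 260
print(seq[-4:])     # [16384, 16385, 16386, 24575]
```

Because the caption always comes first, a model trained this way can be given only a caption at generation time and asked to continue the sequence with image tokens.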

DALL-E 1 helped launch the current wave of text-to-image generators, such as DALL-E 2 and Midjourney, which are generally trained on large datasets of images paired with text captions. Their designs differ, however: DALL-E 2, for example, replaced DALL-E 1's autoregressive transformer with a diffusion-based image decoder.

2022: Unveiled ChatGPT to the world

Sutskever helped launch ChatGPT on 30 November 2022. It quickly caught the public's attention and reached 1 million users in just five days.

Understanding the context of a conversation and producing appropriate responses is one of ChatGPT's key features. The bot keeps track of the thread of your conversation: earlier questions and answers are fed back to the model along with each new message, so its responses can build on them.
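A minimal sketch of how a chat system can "remember" a conversation: the earlier turns are simply included in the text the model reads each time. ChatGPT's actual internal format is not described here, so the `User:`/`Assistant:` layout, the `build_prompt` helper, and the `max_words` budget (a stand-in for a model's limited context window) are all hypothetical.

```python
from __future__ import annotations


def build_prompt(
    history: list[tuple[str, str]], user_message: str, max_words: int = 50
) -> str:
    """Assemble the text a chat model would see: earlier turns plus the new message.

    history holds (speaker, text) pairs, oldest first. max_words is a hypothetical
    stand-in for the model's limited context window.
    """
    lines = [f"{speaker}: {text}" for speaker, text in history]
    lines.append(f"User: {user_message}")
    # Drop the oldest turns until the rest fit the budget; the new message is always kept.
    while len(lines) > 1 and sum(len(line.split()) for line in lines) > max_words:
        lines.pop(0)
    lines.append("Assistant:")  # the model continues the text from here
    return "\n".join(lines)


history = [("User", "What is the capital of France?"), ("Assistant", "Paris.")]
print(build_prompt(history, "How big is it?"))
```

Because "Paris." is still part of the input, a model reading this prompt can work out that "it" refers to Paris. The truncation loop also illustrates why very long conversations can lose their earliest details: once the budget is exceeded, the oldest turns no longer reach the model.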

Unlike many earlier chatbots, which were limited to scripted, pre-programmed responses, ChatGPT generates its responses on the fly, allowing for more dynamic and varied conversations.

Elon Musk, who was one of the founders of OpenAI, said, “ChatGPT is scary good. We are not far from dangerously strong AI.”

Endnote

Ilya Sutskever's passion for artificial intelligence has driven groundbreaking research that changed the course of the field. His work in deep learning has been instrumental in advancing the state of the art and in shaping the field's future direction.

Despite lucrative financial opportunities, Sutskever has chosen to pursue his passion and focus on his research; his dedication is an example for any researcher.

We have already witnessed Sutskever's impact on our world.

Yet, it feels this is just the start.

Resources

- [1] Sequence to Sequence Learning with Neural Networks (proceedings.neurips.cc)

- [2] Sutskever on how researchers doubted that neural networks could translate (magazine.utoronto.ca)

- Metz, Cade (2021). Genius Makers. New York: Penguin Random House.

- Ilya Sutskever: Deep Learning | Lex Fridman Podcast #94 (YouTube)

- Deep Learning Theory Session, Ilya Sutskever (YouTube)

- Improving Language Understanding by Generative Pre-Training (cdn.openai.com)

- Zero-Shot Text-to-Image Generation (mlr.press)
